Layperson's Description:

Grassland is peer-to-peer networked, AI software that takes video camera footage and remembers and stores the individual features, movements and geo-locations of every person, vehicle, building and relevant object as a virtual, 3D simulation in real time. The software shares only the relevant 3D data with the rest of the network so they can all have a complete simulation of what they're all seeing around the world.

Grassland software was created in 2018 after I was asked (April 13, 2018; ~3:20 PM) to solve a problem faced by Facebook's AI research labs in Montreal: how to give machines an intuitive understanding of the laws of physics from their own independent, real-world experiences. (This was likely due to some previous discoveries I'd made in this field[7].) The problem intrigued me, so I went back to my home in Ottawa and a month later discovered a solution that required a new theory of geometry. The mathematics also made it clear that any software implementation that would satisfy the necessary requirements must, by definition, be "trustless" and antifragile and must create a "Prisoner's Dilemma", all in favour of increasing its independent experiences. It is trustless in that the entire system is designed so that it'll always work in the way it's supposed to even if the entities that it interacts with in the real world aren't trustworthy. It is antifragile in that, because attacks, volatility, stressors, etc. are a natural part of the real world, its discernment should improve as a result of experiencing them. And it creates a Prisoner's Dilemma since it would provide any AI that "defects" to it with a self-perpetuating advantage in robotic intelligence and situational awareness: through it, any internet-connected machine could "walk through" and "experience" people's entire lives or the "life" of any object from all positions, perspectives, and timestamps simultaneously. It was therefore unnecessary to add typical software restrictions, since they would compromise its independence and place unnatural impediments on its growth and proliferation. Its structure would therefore be an emergent property of human self-interest, just as humans themselves are simply an emergent property of the self-interest of their microorganisms.

Although the data is the same for everyone (it's just a model of the real world), anyone can build public or private applications based on the specific problems they want to solve using that data. It could be for finding lost children, helping a hedge fund model a retail store's or factory's performance to predict quarterly earnings, giving an insurance company the tools to model and assess its risk portfolio, or helping a city solve its traffic and emergency response problems. (See "Use Cases" below.)

Technical Description:

Grassland is a P2P robotic vision and navigation system that is self-organizing, self-correcting and self-financing. The software efficiently scans any 2D video feed from any camera to generate a compressed, searchable, timestamped, real-time, 5D+ simulation of the world. The network's distributed API freely gives any machine complete situational awareness so that it can understand and trustlessly navigate any environment with no restrictions.

Grassland is politically stateless and permissionless; anyone can take part. Every node in the network has a permissionless and public API giving any external application or computer free access to Grassland data across the entire network, letting any internet-connected object trustlessly internalize, understand and interact intuitively with both past and present states of the real world and respond to even the tiniest changes taking place around the globe. Meanwhile, the combined work of the network makes it computationally intractable for nodes to submit fake data (see proof-of-work description below).

Postulates:

The three ('1','2','3') postulates/axioms below were considered to be "mathematically axiomatic". To accurately model a system like Grassland, in which the necessity for predictability cannot be overstated, it was sufficient to just regard each node (software instance) as an economic agent; human agency was not necessary since it becomes entirely subsequential as life gets enveloped, modelled and mirrored within. To put it simply, I took the following three postulates, and therefore the system they define, as "true" (as defined by "realism") on assumption only, then proceeded to deduce a programmatic theorem that naturally follows from, satisfies and is a logical consequence (not necessarily a causal connection) of those postulates: not quite 'features', but a 'logical framework'. The Grassland algorithm (and its underlying equations, to be delivered in a follow-on supplement) is put forward as a proof of that theorem.

1. Trustless: There exists a computer networking system ("system" hereafter) wherein because successfully submitting fake data (see "Closed Under Computation") in the system approaches the limit of computational intractability, all of its nodes (or artificial economic "entities") find it more profitable to be honest.

2. Economic Incentive: There exists a system wherein as long as its entities are at least acting in their own economic self-interest the system would undergo continual expansion (in our case, the remaining "dark" areas of the map will be "lightened up").

3. Data Symmetry: There exists a system wherein no entity could maintain a data asymmetry (e.g. one-sided surveillance, stochastic (non-deterministic) outputs, etc.) so long as there are other entities at least acting in their own self interest. (A "scorched earth policy")

Closed[3] Under Computation:

It follows then that all necessary distinction between data that's valid or invalid, as defined by the system's utility, is entirely "closed" under the system's proof-of-work. That is, nothing more than a "universally available method of computation", Δ, acting upon the network's federated data, Ε, is needed to determine Ε's validity or invalidity to the level of certainty that satisfies the requirements for utility, μ, tacitly "agreed" upon by the system's entities (since that's how we defined an entity above), such that the greater the total amount of computation, Δ, within the system, the greater its capacity to validate Ε. The system thus has no need of externalities not "closed under [its] computation" that require privileged access, specific locality, exclusive information, etc.

Therefore Δ is deterministic and is computable over any element of Ε within some reasonable amount of time, ψ, as determined by the requirements for utility, μ (and therefore with a reasonable amount of computation). That is, for any given ε ∈ Ε, Δ(ε) is the same for any of the system's entities that computes Δ(ε). And for any given ε ∈ Ε, Δ(ε) is computed by any given entity of the system in time t, where t lies somewhere on the interval [0, ψ) and ψ is some small, positive real number. Moreover, if t ≥ ψ, and is therefore not reasonable with respect to μ, none of the postulates could be satisfied, since data symmetry could not be maintained or data validated within a practical or economically feasible timeframe. (In our case, speaking in a practical sense, such determinism requires an implementation with the highest possible guarantee of consistent and expected behaviour between the entities, because all entities must accept and reject the exact same data (binary sequences), and all within a certain amount of time.)
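
The two conditions in the paragraph above can be restated compactly in symbols. This is only a restatement, not part of the underlying equations promised in the follow-on supplement; the entity set \mathcal{N}, the per-entity notation \Delta_a and the running time t_a(\varepsilon) are introduced here purely for illustration.

```latex
% Determinism: every entity computes the same result for the same data.
\forall\, \varepsilon \in \mathrm{E},\ \forall\, a, b \in \mathcal{N}: \quad
  \Delta_a(\varepsilon) = \Delta_b(\varepsilon)

% Bounded time: every entity finishes within the window fixed by the utility \mu.
\forall\, \varepsilon \in \mathrm{E},\ \forall\, a \in \mathcal{N}: \quad
  t_a(\varepsilon) \in [0, \psi), \qquad \psi = \psi(\mu) > 0
```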

Recursive Subjective Value Substitution via Entropy: It follows then that because the system commodifies and effectively discounts its socio-economic and behavioural data to zero, since it's no longer exclusive but ubiquitous, the 'economic incentive' left to each entity would therefore be the end of a subjective value substitution that constantly shifts away from the 'signified', the thermodynamic and Shannon entropy of its continual data generation, towards the only other thing that remains: its new, resultant 'sign'. It is with this sign that, at every instance, the entity's entire subjective value (the thing its continued behaviour suggests it consistently acts to increase) must be completely, irreversibly, and recursively associated. The sign's own 'signified' is the irrefutably entropic instantiation of this artificially generated reward (as far as this system's underlying equations, which will be published in a follow-on supplement, are concerned, the data is, metaphorically speaking, just a ubiquitously broadcast "carrier signal" upon which the proof-of-work is encoded). This holds to the extent that for every quantum/bit of information (certainty) gained for the entities of the system there is an associated, antecedent bit lost (or "anti-bit gained", so to speak) in entropy, whether they decode it as being Shannon or thermal.

Software Features:

Economically Self-Interested Nodes (i.e. How Nodes Find a Nash Equilibrium[2])

- Each software instance (node) operates under the assumption all other nodes are acting in their own economic self-interest, weighing their own opportunity costs of expending computational resources.

-

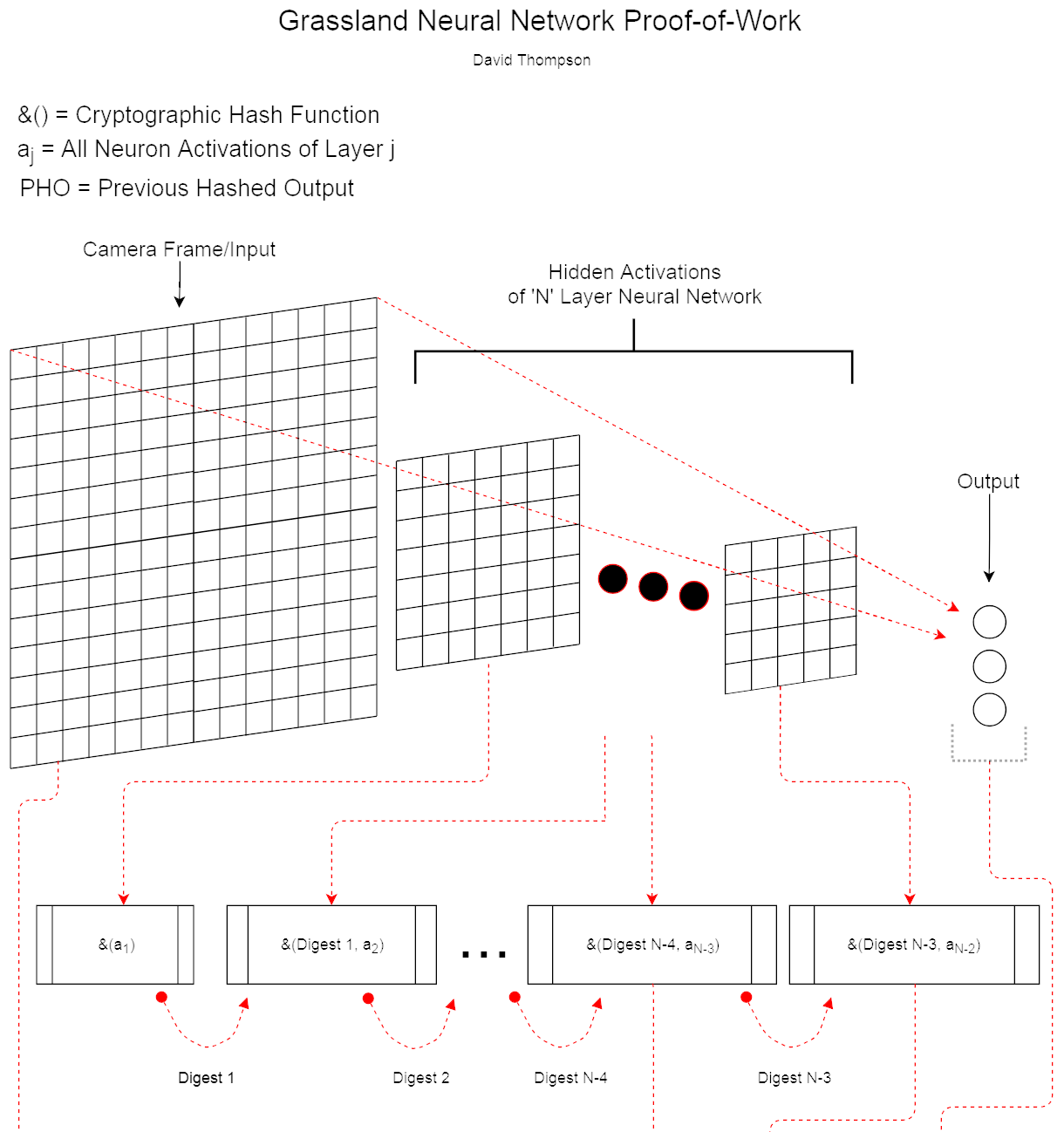

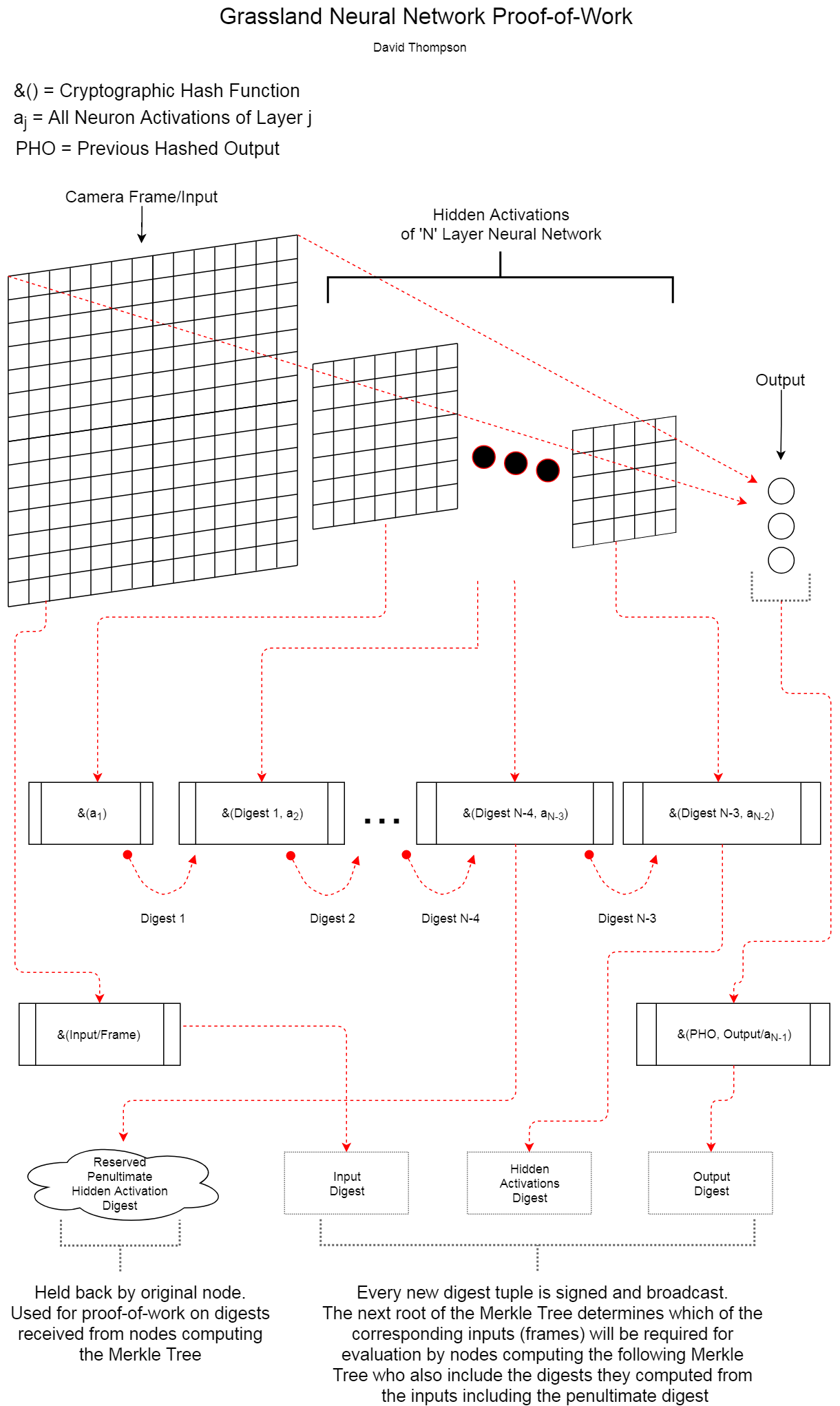

The network ensures all data must have passed through the hidden layers of the current AI/object-detection model (see diagram below), a non-trivial computation, the additional benefit of which is that the only strategy for a rational, self-interested node is to contribute to the network and makes the production of passable fake data prohibitively expensive.

- This proof-of-work algorithm also generates a reward for the node performing the detections. The reward is backed by statistical proof, easily verifiable by other nodes, that economically scarce computational resources were expended to process each frame correctly. Nodes are effectively "trading" whatever currency their compute costs are denominated in for the reward, which rewards continued operation and node territorial expansion.

- Validity of the data in a region increases with the density of nodes in that region. But as long as a node's neural network output satisfies the proof-of-work it'll still get the reward regardless of density; the network has proof the node expended computational resources. It's still buying into the network and gets its share of the reward. Therefore, because the size of this "pie" never increases but the total amount spent to get an equal slice of it does, this increase in value to the wallets of all nodes satisfies Postulate 2, the system's "Economic Incentive".

- Camera frames aren't stored in the database, just information about the objects the node "sees", as determined by the output of the neural network, and a hash of that same information (see diagram below). The frames themselves are only needed for a short while afterwards for random confirmations (where selected frames are distributed peer-to-peer over IPFS), and then they can be discarded.

- Since each database entry is also a proof-of-work, storage costs never exceed the value the market has placed on the data, not to mention its intrinsic value.

- The network prevents any party, inside or outside the network, from forming a data asymmetry (one-sided data gathering of human behaviour) as long as there remain Grassland nodes acting in their own self-interest.
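
The storage scheme described above (object information plus a hash of that information, with no camera frames kept) might be sketched as follows. This is a minimal illustration under stated assumptions, not Grassland's actual record format: the field names, the canonical-JSON serialisation and the use of SHA-256 are all hypothetical choices made for the example.

```python
import hashlib
import json


def detection_digest(detections):
    """Return a SHA-256 hex digest of one frame's detection records.

    Canonical JSON (sorted keys, fixed separators) is used so that any node
    serialising the same records produces the same bytes. The record layout
    and the choice of SHA-256 are assumptions made for this sketch.
    """
    canonical = json.dumps(detections, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def make_entry(timestamp, detections):
    """Build a database entry: object information plus its hash, no pixels."""
    return {
        "timestamp": timestamp,
        "detections": detections,
        "digest": detection_digest(detections),
    }


# Hypothetical neural-network output for a single frame.
detections = [
    {"label": "person", "lat": 45.4215, "lon": -75.6972, "score": 0.93},
    {"label": "vehicle", "lat": 45.4216, "lon": -75.6970, "score": 0.88},
]
entry = make_entry(1523647200, detections)
print(entry["digest"])
```

Because the digest covers only the detection records, any node holding the same records can recompute and compare it without ever receiving the original frame.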

Steadily Increasing Detail And Difficulty

- As the sum total of the network's computational throughput changes, the computational requirements for each node change as well; e.g. if the average throughput increases, demand has increased, and therefore nodes must increase their individual FPS. This has the added benefit of raising the opportunity cost of fabricating data, making it an increasingly non-viable endeavour.

- Every few months ("eons"), nodes download additional layers for the network's deep learning model in order to recognize more objects and activities with greater certainty. So over time the network becomes an increasingly accurate and harder-to-fabricate representation of the real world.

- With each new model version, there should be a marginal decrease in the network's uncertainty of the probable object paths within the "foggy" areas of the map beyond any node's field of view.

- Nodes are effectively earning the reward with whatever currency their compute costs are denominated in, but the amount rewarded for the neural network's output remains the same. So as detail and computational requirements increase, they're essentially "buying" the reward at a higher and higher cost. This increases the "rarity" of the rewards already in possession while at the same time increasing the intrinsic value (usefulness) of the distributed data, since this computational increase is the result of more and more detailed models. This rewards those who took on earlier, and therefore more, "computational risk" and those who "lighten" more "dark" areas of the map.
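
The relationship described above between network throughput, per-node requirements and the cost of a fixed reward can be illustrated with a small worked example. The source only states the direction of the adjustment, so the proportional scaling rule, the function names and all the numbers below are assumptions chosen for illustration.

```python
def required_fps(current_fps, network_avg_throughput, reference_throughput):
    """Scale a node's required frames-per-second with network throughput.

    Assumption: the requirement moves in direct proportion to the ratio of
    the network's current average throughput to a reference value. The
    source states only that requirements rise when throughput rises.
    """
    if min(current_fps, network_avg_throughput, reference_throughput) <= 0:
        raise ValueError("all rates must be positive")
    return current_fps * (network_avg_throughput / reference_throughput)


def cost_per_reward(compute_cost_per_frame, frames_required, reward):
    """Compute cost paid per unit of reward when the reward is held fixed."""
    if reward <= 0:
        raise ValueError("reward must be positive")
    return compute_cost_per_frame * frames_required / reward


# Hypothetical figures: average throughput rises 20% while the reward stays fixed.
old_fps = 10.0
new_fps = required_fps(old_fps, network_avg_throughput=120.0,
                       reference_throughput=100.0)
old_cost = cost_per_reward(0.001, old_fps, reward=1.0)
new_cost = cost_per_reward(0.001, new_fps, reward=1.0)
print(new_fps, old_cost, new_cost)
```

With the reward unchanged, the higher frame requirement means each unit of reward is "bought" at a higher compute cost, which is the effect the section describes.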

Growing The Merkle Tree (formerly titled 'Unrewriteable History And Reasonable Storage Requirements')

- Several times a day, the last output digests from each node, the tip of each of their rolling hashes (see diagram below), are hashed into a Merkle Tree. The tree's leaves are paired based on node geospatial proximity along longitudinal bands, a sort of "Longitude (Row) Major Order".

- The Merkle Tree is distributed to all nodes. Most nodes don't need to keep the entire database, just their branch of the Merkle Tree.

- Storage of the entire database is only truly a problem when storage costs exceed the market value of what's being stored. However, as stated earlier, since each database entry is also a proof-of-work, storage costs never exceed the value the market has placed on the data.

- Each new Merkle root determines which frames from each node's pre-published digest tuples are required for evaluation.

- Flipping a single bit of a frame captured by one node would give it a completely different set of rolling hashes, which upon entering the global Merkle Tree would produce an entirely different root hash. So once a root is set and distributed (upon which it starts being hashed into the upcoming Merkle Tree), it would take exponentially more processing power than is available on the planet to successfully create an alternative version of Grassland's history of events.

- The process of producing the next Merkle Tree is a competition any node can enter. A person who cultivates real trees is an arborist, so we'll use that term. Our "arborists" will also need to perform inference on the frames that were randomly selected for evaluation based on the last Merkle root, and will need to publish each frame's digest tuple and the "reserved digest" (see diagram). If the original node holding the reserved digest signs the arborist's digest, it generates that node's reward and a percentage goes to the arborist. But that will only propagate if that arborist's tree is also accepted by a majority of the network. Therefore, as long as (1) there is always active competition to compute the Merkle Tree by statistically verifying the work of all other nodes and (2) over 50% of all active nodes prevent inflation by rejecting trees that don't pass their proof-of-work, then all rational (self-interested) nodes will have to be honest.
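
The Merkle Tree steps in this section can be sketched roughly as below: order node tips along longitude bands, hash them pairwise into a root, and derive the frames to evaluate from that root. This is an illustration under assumptions rather than the network's actual algorithm. The one-degree band width, the handling of an unpaired leaf, the counter-based frame selection and every function and field name are hypothetical.

```python
import hashlib
import math


def sha256(data: bytes) -> bytes:
    """Return the raw 32-byte SHA-256 digest of `data`."""
    return hashlib.sha256(data).digest()


def order_by_longitude_band(nodes, band_width_deg=1.0):
    """Sort nodes band by band (west to east), then by latitude within a band.

    This is one possible reading of "Longitude (Row) Major Order".
    """
    return sorted(
        nodes,
        key=lambda n: (math.floor(n["lon"] / band_width_deg), n["lat"]),
    )


def merkle_root(leaves):
    """Hash adjacent leaves pairwise, level by level, down to one root.

    An unpaired digest at the end of a level is carried up unchanged
    (an assumption; the source does not say how odd counts are handled).
    """
    if not leaves:
        raise ValueError("at least one leaf is required")
    level = list(leaves)
    while len(level) > 1:
        nxt = [sha256(level[i] + level[i + 1])
               for i in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:
            nxt.append(level[-1])
        level = nxt
    return level[0]


def select_frames(root, frame_count, sample_size):
    """Derive `sample_size` distinct frame indices deterministically from a root."""
    if not 0 <= sample_size <= frame_count:
        raise ValueError("sample_size must be between 0 and frame_count")
    chosen, counter = [], 0
    while len(chosen) < sample_size:
        digest = sha256(root + counter.to_bytes(8, "big"))
        index = int.from_bytes(digest, "big") % frame_count
        if index not in chosen:
            chosen.append(index)
        counter += 1
    return chosen


# Hypothetical nodes; "tip" stands in for the tip of each node's rolling hash.
nodes = [
    {"id": "a", "lat": 45.42, "lon": -75.69, "tip": sha256(b"node-a frames")},
    {"id": "b", "lat": 43.65, "lon": -79.38, "tip": sha256(b"node-b frames")},
    {"id": "c", "lat": 45.50, "lon": -73.57, "tip": sha256(b"node-c frames")},
]
ordered = order_by_longitude_band(nodes)
leaves = [n["tip"] for n in ordered]
root = merkle_root(leaves)

# Flip a single bit in one node's input and rebuild the tree.
flipped = bytearray(b"node-b frames")
flipped[0] ^= 1
tampered_root = merkle_root([sha256(bytes(flipped))] + leaves[1:])

print(root.hex())
print(tampered_root != root)
print(select_frames(root, frame_count=1000, sample_size=5))
```

Because frame selection depends only on the root, every node that holds the same root derives the same set of frames to evaluate, and any change to a leaf changes both the root and the selection.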